Note: since the topics were unrelated, Week 14 is split into two posts:

Wednesday, November 29: Ethical AI

Ben Shneiderman. Bridging the Gap Between Ethics and Practice: Guidelines for Reliable, Safe, and Trustworthy Human-centered AI Systems. ACM Transactions on Interactive Intelligent Systems, October 2020.

Today’s topic is ethical AI, with a focus on human-centered AI (HCAI). From this perspective, AI is seen as amplifying the performance of humans.

Central to HCAI is the requirement that systems be reliable, safe, and trustworthy, which calls for collaboration among software engineers, companies, government, and society as a whole.

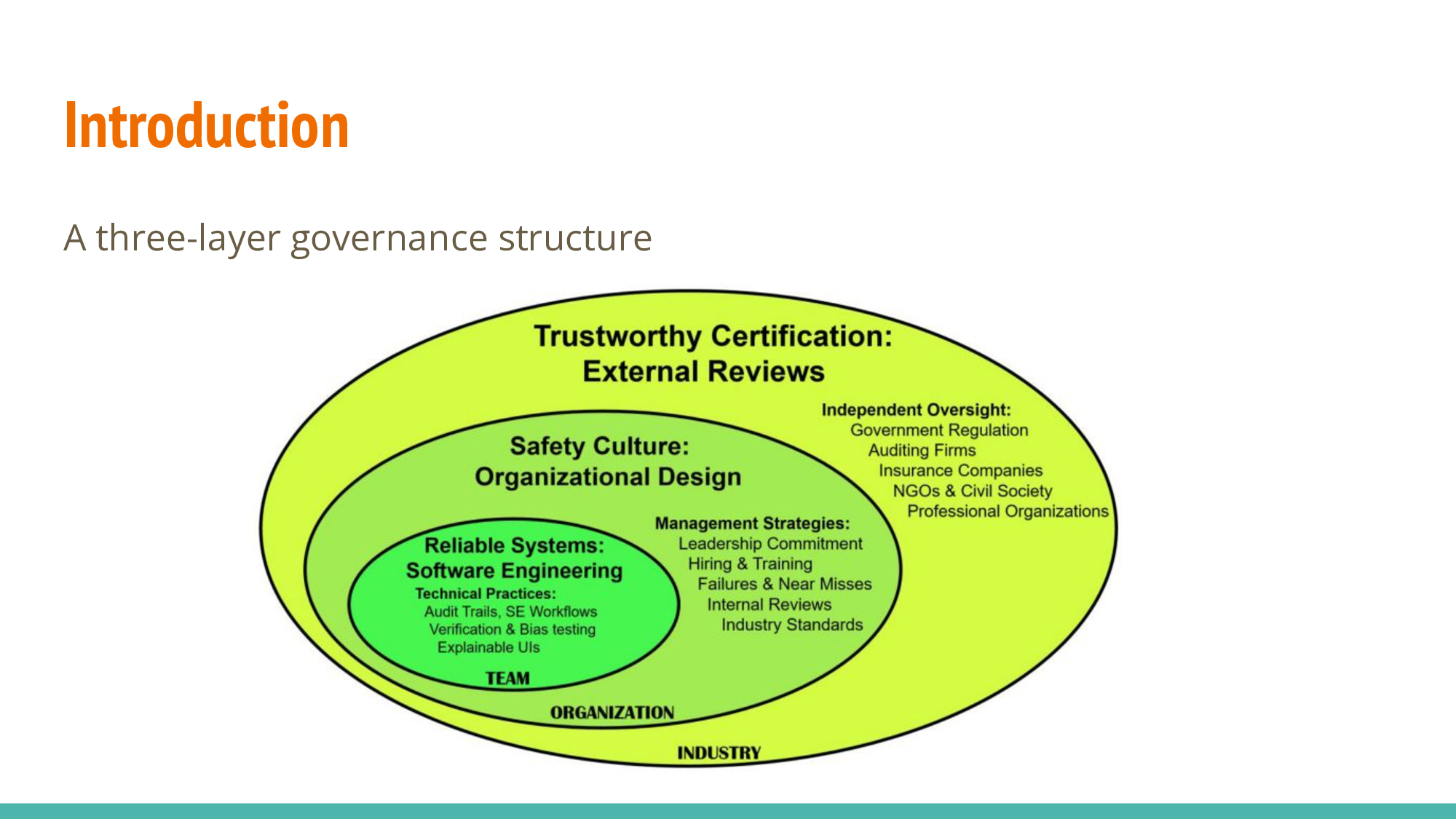

- Reliable Systems: Software Engineering

- Safety Culture: Organizational Design

- Trustworthy Certification: External Reviews

Things that should be considered when developing ethical AI:

- Data quality

- Training log analysis

- Privacy and security of data

Example: a flight data recorder (FDR) provides a quantitative record of a plane's operation that can be used to check whether the plane is safe and stable, and this record helps in designing the next generation of products.

Applying the FDR analogy to AI: we can gather quantitative feedback on the product or strategy we want to test. This raises questions such as what data we need, how to analyze log data (or select useful data from operation logs), and how to protect the data from attacks.

With a similar approach, a claim that an AI system is safe can be backed by testing and logs, rather than by a ‘take our word for it’ assurance.
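To make the flight-data-recorder analogy more concrete, here is a minimal Python sketch of log-based quantitative feedback. It assumes a hypothetical log format (one JSON object per line with `model_version` and `outcome` fields); neither the format nor the function name comes from the paper. The sketch turns raw operation logs into a per-version failure rate, the kind of evidence that could support a safety claim.

```python
import json
from collections import defaultdict


def failure_rates(log_lines):
    """Return {model_version: fraction of logged operations whose outcome is not "ok"}.

    Assumes a hypothetical log format: one JSON object per line with
    "model_version" and "outcome" fields. Malformed lines are skipped.
    """
    totals = defaultdict(int)
    failures = defaultdict(int)
    for line in log_lines:
        try:
            record = json.loads(line)
            version = record["model_version"]
            outcome = record["outcome"]
        except (json.JSONDecodeError, KeyError, TypeError):
            # Skip corrupt or incomplete records rather than crash the audit.
            continue
        totals[version] += 1
        if outcome != "ok":
            failures[version] += 1
    return {version: failures[version] / totals[version] for version in totals}


# Hypothetical operation log.
logs = [
    '{"model_version": "v1", "outcome": "ok"}',
    '{"model_version": "v1", "outcome": "error"}',
    '{"model_version": "v2", "outcome": "ok"}',
    "not json",
]
print(failure_rates(logs))  # {'v1': 0.5, 'v2': 0.0}
```

Skipping malformed lines keeps the audit running, but in practice one would probably also count them, since a rising rate of corrupt records is itself a signal worth reviewing.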

Software engineering workflows: AI systems require workflows that are updated to align with their goals.

Verification and validation testing:

- Design tests that align with user expectations and prevent harms.

- The goals of AI systems are more general or high-level than those of traditional software programs, so tests need to be designed around user expectations rather than solely around technical details.

Bias testing to enhance fairness:

- Test training data for opacity, scale, and harm.

- Use specialized tools for continuous monitoring.

- Even after a model is trained, it still needs testing to assess risk. This may require a dedicated team within the organization or an external company to test the model's safety, and the testing should be continuous.
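One common way to test for bias is to compare error rates across demographic groups. The sketch below assumes a binary classifier and uses the per-group false negative rate as the fairness metric; this is only one of many possible metrics and is not prescribed by the paper. The group labels, toy data, and the 0.05 tolerance are hypothetical. Re-running such a check on each new model version or data batch is one way to approximate continuous monitoring.

```python
from collections import defaultdict


def false_negative_rates(y_true, y_pred, groups):
    """Per-group false negative rate: missed positives / actual positives."""
    positives = defaultdict(int)
    misses = defaultdict(int)
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            positives[group] += 1
            if pred == 0:
                misses[group] += 1
    return {g: misses[g] / positives[g] for g in positives}


def flag_disparity(rates, tolerance=0.05):
    """True if the gap between the worst- and best-served groups exceeds tolerance.

    The default tolerance is a hypothetical value, not an established standard.
    """
    if len(rates) < 2:
        return False
    return max(rates.values()) - min(rates.values()) > tolerance


# Hypothetical toy data: 1 = condition present, 0 = absent.
y_true = [1, 1, 1, 1, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 0, 1, 0, 1]
groups = ["A", "A", "A", "B", "B", "B", "A", "B"]

rates = false_negative_rates(y_true, y_pred, groups)
print(rates)                  # {'A': 0.0, 'B': 0.6666666666666666}
print(flag_disparity(rates))  # True
```

False negative rate is a natural choice when a missed detection is the costlier error (for example, a missed diagnosis), but a real audit would usually report several metrics side by side.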

Explainable user interfaces:

- Are difficult to achieve.

- Ensure the system is explainable so that users can understand it and legal requirements are met.

- Intrinsic and post hoc explanations help developers improve the system.

- Design a comprehensive user interface that takes user sentiments into account.

- Post hoc explanations do not require information about the model's technical details; they only need to convey a broad-level idea of the system.

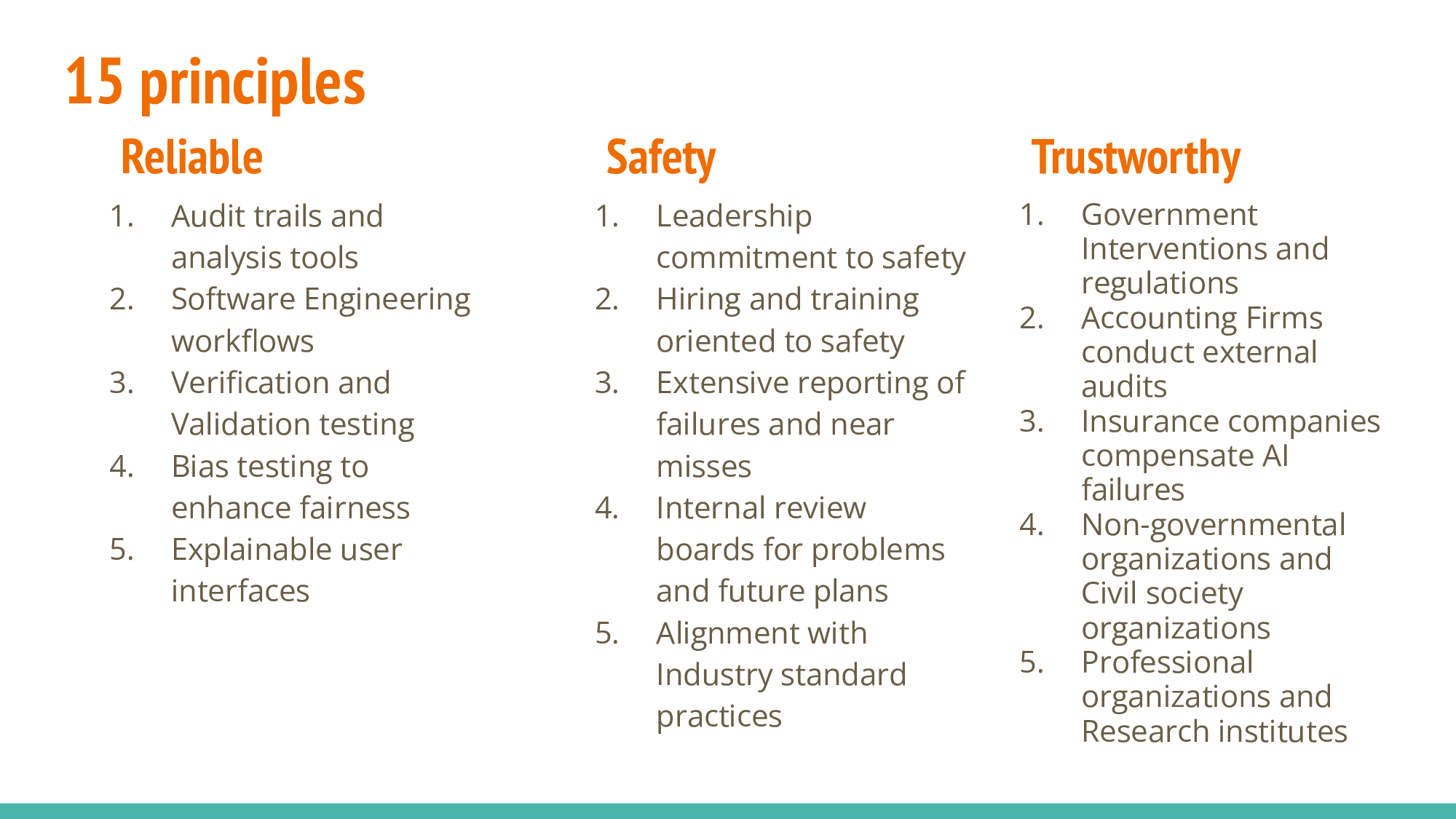

There are five principles for building a safety culture, most of which are top-down approaches (see slides).

Leadership: build a safety-focused team and make a commitment to safety that is visible to employees, so they know leaders are committed to safety.

Long-term investment: safe models require developers who are trained in and committed to safety.

The public can help with monitoring and improvement: public attention creates external pressure, so companies may work harder to eliminate issues.

Internal review boards engage stakeholders in setting benchmarks, addressing problems, and planning for the future.

Trustworthy certification by independent oversight:

- Purpose: ensure continuous improvement toward reliable, safe products, which helps make the whole system trustworthy.

- Requirements: respected leaders, declaration of conflicts of interest, and diverse membership.

- Capacity: examine private data, conduct interviews, and issue subpoenas for evidence.

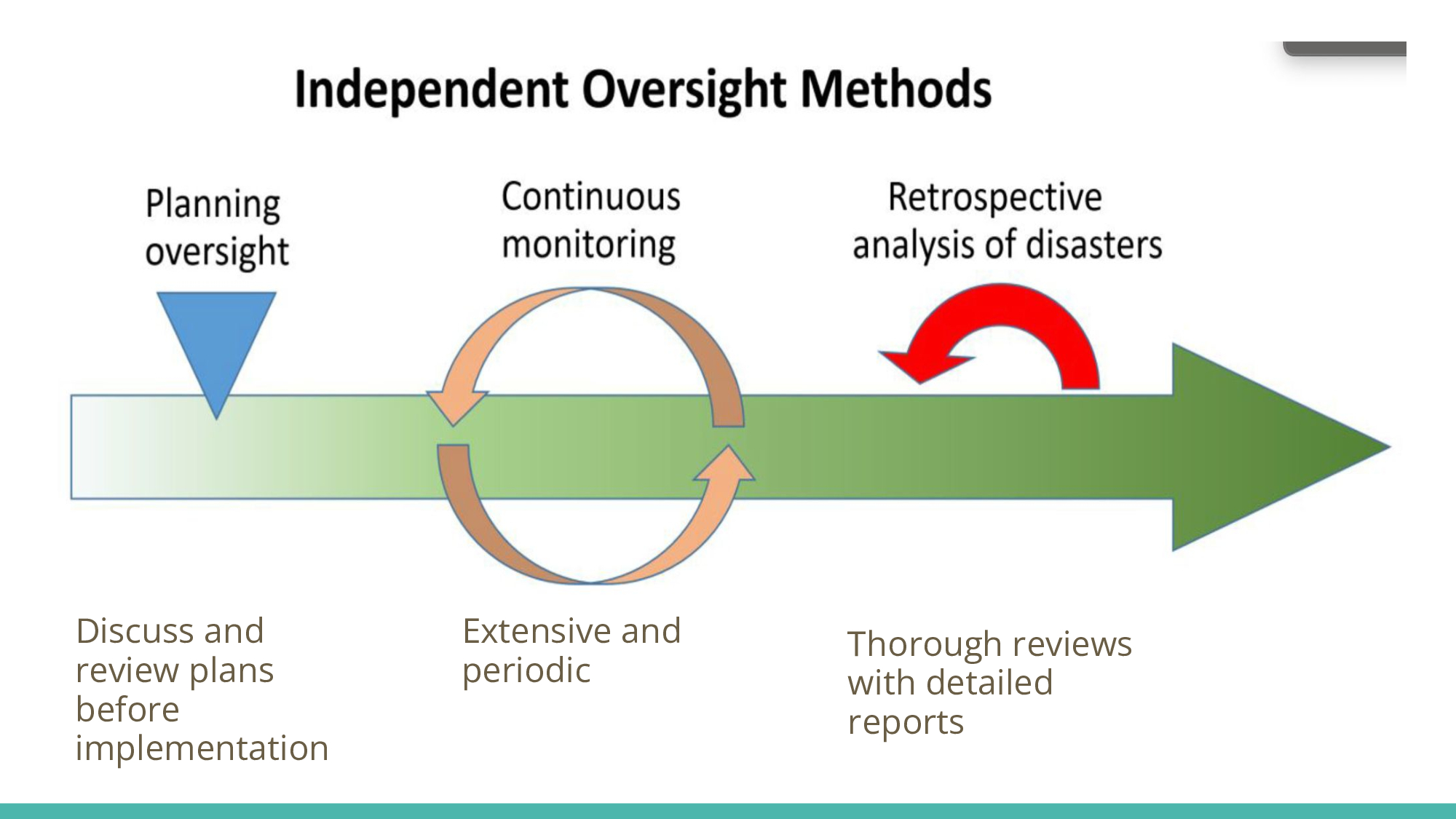

Independent oversight is structured around three core methods:

- Planning

- Monitoring

- Conducting reviews or retrospectives

There are five paths to trustworthy certification:

- Government: policy and regulation aligned with the EU’s seven key principles (listed at the top right of the slide) for transparency, reliability, safety, privacy, and fairness.

- Accounting firms: beyond the internal audits mentioned previously, external bodies should audit the entire industry.

- Insurance companies: adapting policies for emerging technologies such as self-driving cars (details on the next slide).

- Non-governmental organizations: prioritizing the public’s interest.

- Professional organizations and research institutes.

As an activity, we tried role-playing: each group played a different role and considered the 15 principles in terms of “ethical AI”.

Ethical Team:

- Ensuring that the dataset used for skin cancer diagnosis is reliable (bias with respect to skin color; compliance with state laws on collecting data)

- Using various metrics to evaluate the AI

- Coming up with an agreement with patients and doctors

Healthcare Management/Organization:

- Reporting failures (missed diagnoses) for feedback

- Ensuring data security and gathering false positive (FP) and false negative (FN) cases for further training

- Educating staff

- Establishing an accuracy/certainty threshold for AI skin cancer diagnosis and checking the standard for professional verification

Independent oversight committee:

- Whether the dataset is unbiased at every stage and represents all races, genders, etc.

- Data sources (online, hospital) should be considered carefully

- Model explanation and transparency should be considered

- Privacy of personal information, both in the dataset and for users
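The healthcare management group's ideas about a certainty threshold and about gathering FP and FN cases can be sketched as follows. This is a minimal illustration that assumes the model outputs a score between 0 and 1 for each case and that each case has a confirmed ground-truth label; the `(case_id, score, confirmed)` schema, the case IDs, and the thresholds are all hypothetical.

```python
def triage(cases, threshold=0.5):
    """Split labeled cases into false-positive and false-negative ID lists.

    Each case is a hypothetical (case_id, model_score, confirmed) tuple,
    where confirmed is the ground-truth label (e.g. biopsy result).
    """
    false_positives, false_negatives = [], []
    for case_id, score, confirmed in cases:
        predicted = score >= threshold
        if predicted and not confirmed:
            false_positives.append(case_id)   # false alarm, send for review
        elif not predicted and confirmed:
            false_negatives.append(case_id)   # missed diagnosis, report as failure
    return false_positives, false_negatives


def sensitivity(cases, threshold=0.5):
    """Fraction of confirmed cases that the model flags at this threshold."""
    flagged = [score >= threshold for _, score, confirmed in cases if confirmed]
    return sum(flagged) / len(flagged) if flagged else float("nan")


# Hypothetical labeled cases.
cases = [
    ("c1", 0.92, True),
    ("c2", 0.40, True),   # missed at threshold 0.5
    ("c3", 0.71, False),  # false alarm at threshold 0.5
    ("c4", 0.10, False),
]
fp, fn = triage(cases)
print(fp, fn)                             # ['c3'] ['c2']
print(sensitivity(cases, threshold=0.5))  # 0.5
print(sensitivity(cases, threshold=0.3))  # 1.0
```

Lowering the threshold from 0.5 to 0.3 catches the missed case c2 and raises sensitivity from 0.5 to 1.0, while the false alarm c3 remains. In general a lower threshold trades more false positives for fewer missed diagnoses, which is why the group tied the threshold to a standard of professional verification.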

There are 15 principles each group can take into consideration for the role-playing discussion.

Reorienting technical R&D means emphasizing oversight, robustness, interpretability, inclusivity, risk assessment, and addressing emerging challenges.

Proposed governance measures include enforcing standards to prevent misuse, requiring registration of frontier systems, implementing whistleblower protections, and creating national and international safety standards. Also highlighted are the accountability of frontier AI developers and owners and the need for AI companies to promptly disclose if-then commitments.

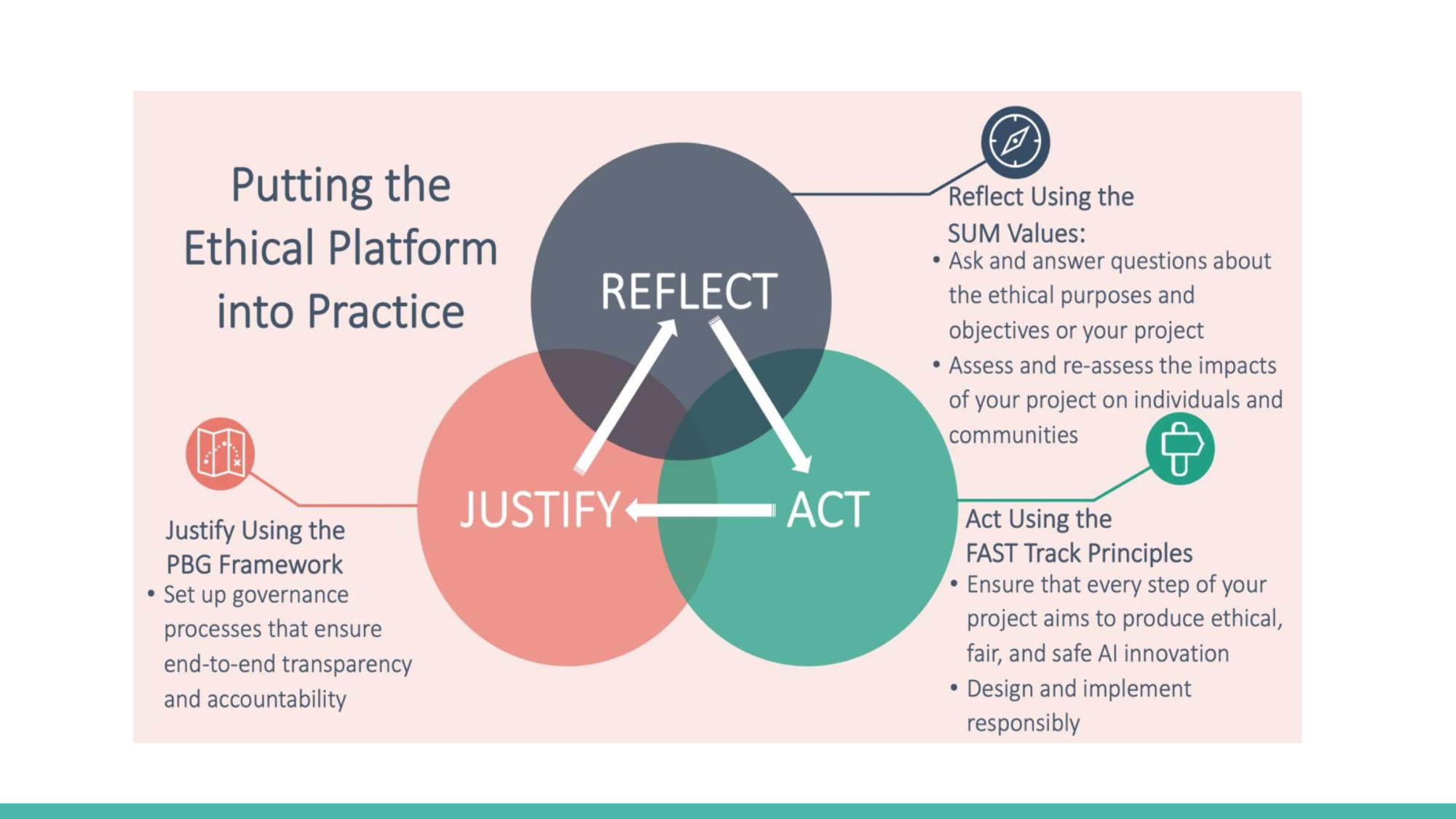

An ethical platform for developing responsible AI products has three building blocks:

- SUM Values: provide a framework for the moral scope of an AI product

- FAST Track Principles: make sure an AI project is fair, bias-mitigating, and reliable

- PBG (Process-Based Governance) Framework: set up a transparent process for an AI product

Putting the ethical platform into practice involves three key steps: reflect, act, and justify.

- Reflect using the SUM Values: ask and answer questions about ethical purposes, and assess the impacts of the AI project

- Act using the FAST Track Principles: ensure every step of development produces safe, fair AI innovation

- Justify using the PBG Framework: set up a governance process that ensures model transparency

Team 1

There are many trajectories that AI development could take, so it would be very difficult to completely rule anything out as a possibility. The team related this to the book “Dark Matter” by Blake Crouch.

Risk would primarily come from bad actors (specifically humans). The team briefly touched on ‘what if the bad actor is the AI?’

Team 2

The potential downfall of humans would not be due to AI’s maliciousness.

In the post-autonomous era, concerns shift to the misuse of models for harmful purposes.

Team 3

The second question seems to be already happening.

Given the rapid technological progress of recent years, a single prompt can result in losing control over AI models, and speculation around ‘Q* (Q-Star)’ suggests a risk of losing control over AI models. However, AI’s power-seeking behavior may still be overstated.

Readings

- Required: Yoshua Bengio, Geoffrey Hinton, Andrew Yao, Dawn Song, Pieter Abbeel, Yuval Noah Harari, Ya-Qin Zhang, Lan Xue, Shai Shalev-Shwartz, Gillian Hadfield, Jeff Clune, Tegan Maharaj, Frank Hutter, Atılım Güneş Baydin, Sheila McIlraith, Qiqi Gao, Ashwin Acharya, David Krueger, Anca Dragan, Philip Torr, Stuart Russell, Daniel Kahneman, Jan Brauner, Sören Mindermann. Managing AI Risks in an Era of Rapid Progress. arXiv 2023.

- Required: Ben Shneiderman. Bridging the Gap Between Ethics and Practice: Guidelines for Reliable, Safe, and Trustworthy Human-centered AI Systems. ACM Transactions on Interactive Intelligent Systems, October 2020.

- Optional: David Leslie. Understanding Artificial Intelligence Ethics and Safety. arXiv 2019.

- Optional: Joseph Carlsmith. Is Power-Seeking AI an Existential Risk? arXiv 2022.

- Optional: Alice Pavaloiu, Utku Kose. Ethical Artificial Intelligence - An Open Question. arXiv 2017.

Questions

(Post response by Tuesday, 28 November)

Paper 1: Bridging the Gap Between Ethics and Practice

- The paper claims, “Human-centered Artificial Intelligence (HCAI) systems represent a second Copernican revolution that puts human performance and human experience at the center of design thinking." Do you agree with this quote?

- Developers/teams, organizations, users and regulators often have different views on what constitutes reliability, safety, and trustworthiness in human-centered AI systems. What are the potential challenges and solutions for aligning them? Can you provide some specific examples where these views do not align?

Paper 2: Managing AI Risks in an Era of Rapid Progress

- Do you think AI systems can be regulated through an international governance organization or agreement, as nuclear weapons are?

- Consider this quote from the paper: “Without sufficient caution, we may irreversibly lose control of autonomous AI systems, rendering human intervention ineffective. Large-scale cybercrime, social manipulation, and other highlighted harms could then escalate rapidly. This unchecked AI advancement could culminate in a large-scale loss of life and the biosphere, and the marginalization or even extinction of humanity.” Do you agree with it? If so, do you think any of the measures proposed in the paper would be sufficient for managing such a risk? If not, which of the authors’ assumptions that led to this conclusion do you think are invalid or unlikely?